Interview: the impacts of “smart” technologies on the lives of black and trans people

The historian Mariah Rafaela Silva shows how technologies replicate and accentuate racial and gender inequalities

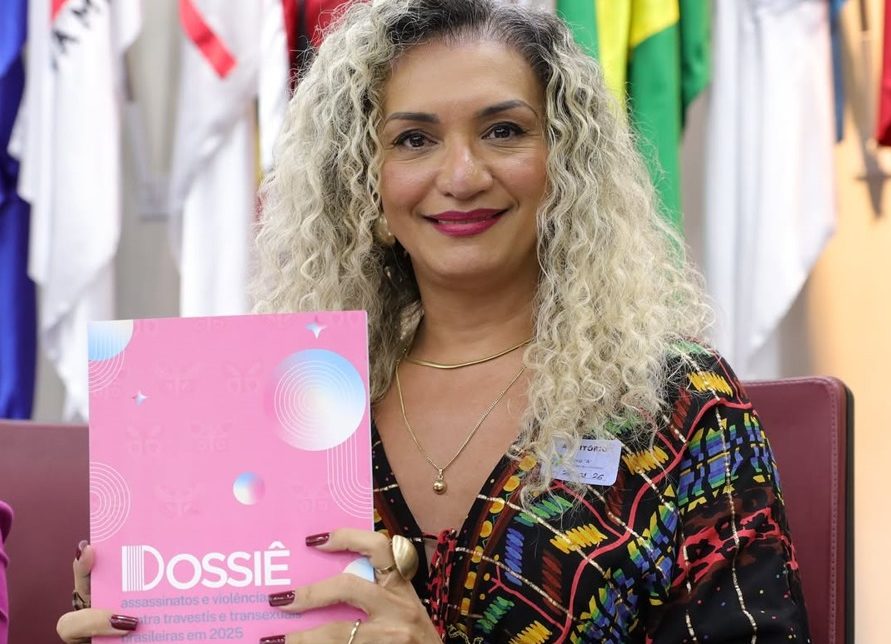

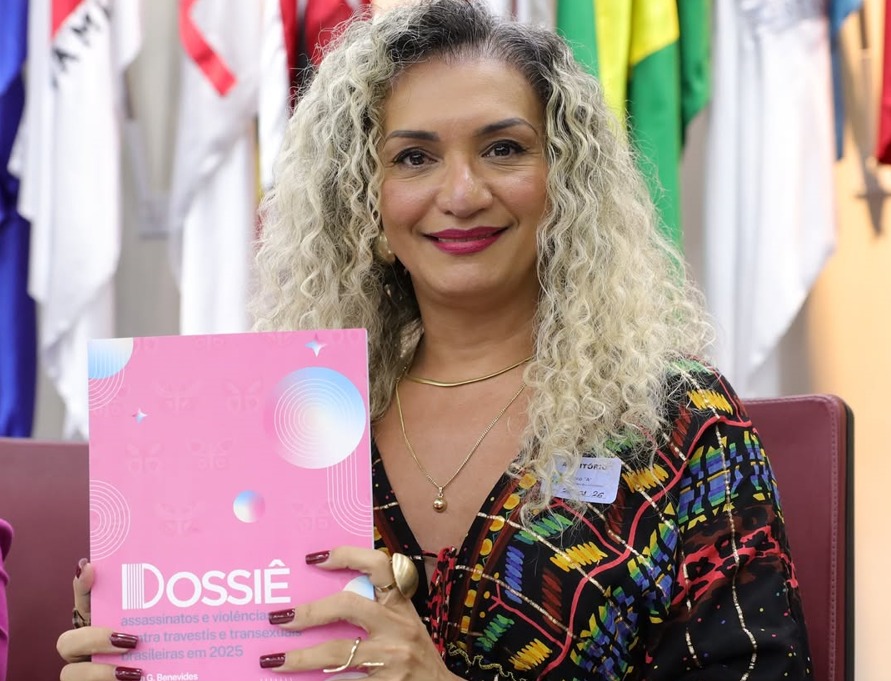

Produced in social contexts marked by racism and transphobia, so-called “smart” technologies, such as facial recognition programs used in public security around the world, can further deepen racial and gender inequalities. Addressing this problem starts with the recognition that these technologies are not neutral and that they serve a variety of interests. This is one of the considerations that the historian Mariah Rafaela Silva presents in her article “Navigating screens: the technopolitics of security, the smart paradigm and gender vigilantism in a data harvesting age”, published in issue 31 of the Sur Journal.

Mariah Rafaela Silva graduated in History of Art, holds a Master’s Degree in History, Theory and Cultural Criticism and is currently working towards a doctorate in Communications at the Fluminense Federal University. In addition, she has worked as a lecturer at the Department of History and Art Theory at the Federal University of Rio de Janeiro and studied gender, migration and globalization at the New University of Lisbon. She is also a social activist and a collaborator on the project O Panóptico. Mariah was one of the four grantees of the 31st issue of Sur Journal. Speaking to Conectas, she outlines some of the points made in her essay.

Read the interview:

Conectas: Talk about your essay. What considerations does it present?

Mariah Rafaela Silva – I have been working with human rights for over ten years. But lately I have been researching biometric technologies, such as facial recognition, retinal scanning and other so-called “smart” technologies, looking into how they impact the experiences of marginalized individuals – specifically black women, transsexual women and LGBTI people in favelas. In my essay, I discuss some of this work, my debates with other researchers, with other theorists and with other activists to consider how these “smart” technologies acquire a political dimension and, as such, configure and automate a certain process of subjectification in society, in particular with regard to transsexuals, transvestites and transsexual women. I also look at how these technologies determine norms, the pragmatics of life, based on a set of surveillance models – data surveillance, surveillance on the internet, surveillance cameras. [I analyze], therefore, how all this permeates our subjectivity.

Conectas: How do surveillance technologies discriminate against trans and racialized people?

Mariah Rafaela Silva – All technology is political in nature. Because of this and, obviously, other denser issues, technologies are not neutral. They have a historicity, in other words they also depend on certain narratives and certain interests, mainly economic and of course hegemonic, for their regimentation. This is where they impact the lives of transvestites, transsexuals and black people. Why? Because there is racism and transphobia underpinning the social relationship models and this reflects how technologies translate or reiterate these processes that are already part of society. In other words, when a society that is underpinned by certain logics, certain racist and transphobic movements, builds its technologies, they tend to reflect these same principles. For example, when it comes to biometric technologies, specifically facial recognition technology, the algorithms are configured so that the faces of black people, in general, are not easily readable. In the case of trans people’s faces, these devices are likely to function in a binary way. When these technologies start to be applied within the scope of security, you have to be very careful with this high margin of error, which we call false positives. Most mistakes happen with black people and transsexual people. Some examples: people who are taken to a police station and detained because the device identified them as being guilty of a crime they did not commit, or trans people who are unable to validate documents due to an error or identity conflict.

Conectas: What can civil society/human rights organizations do to alleviate this situation and successfully defend diversity and democracy in the problems you have raised?

Mariah Rafaela Silva – Civil society can play a fundamental role, but it needs to be invited to the debate. These technologies are sold based on certain interests and are sold as being miraculous for security. On paper, everything looks great, everything works wonderfully. But in fact, that’s not the case. What is not talked about is the cost of these technologies for people’s own safety when mistakes occur. Civil society organizations have an ethical duty to take a stand against this, against the narrative, against the lobby exclusively around security. There has to be transparency in the implementation of these technologies, presenting the pros and cons to the population. Organizations should focus their efforts on reaching and creating dialogue that is effective for the people who are, in fact, providing lots of data but who are less able to build or contract protection equipment, whether on a personal device, like a smartphone, computer or watch… or when using banking information. In doing so, we create a culture of debate, an honest debate, a frank and transparent debate about the effective impacts.