2024 Elections: disinformation causing real harm to democracy and people’s lives

According to experts, the phenomenon of disinformation, amplified by digital platforms, distorts the truth and undermines trust in institutions

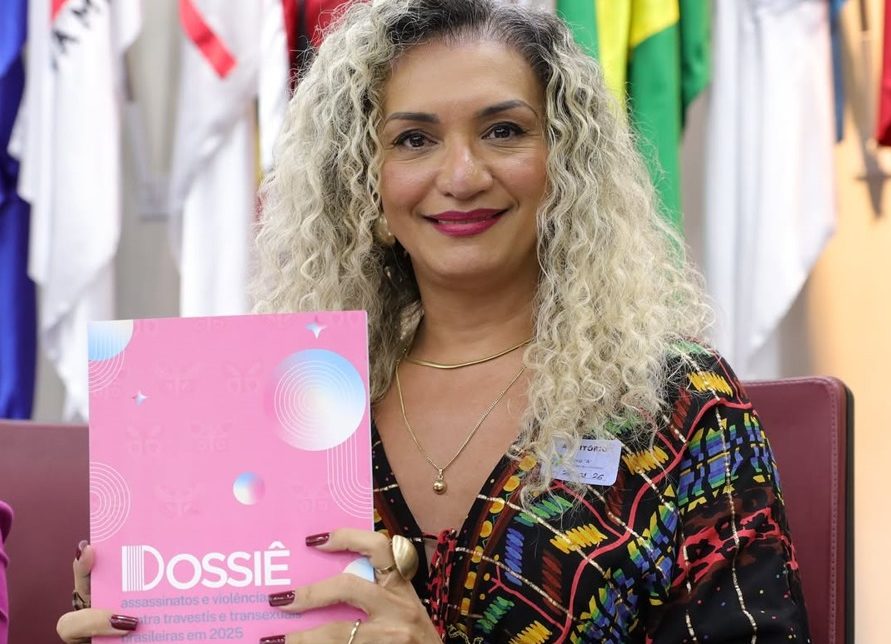

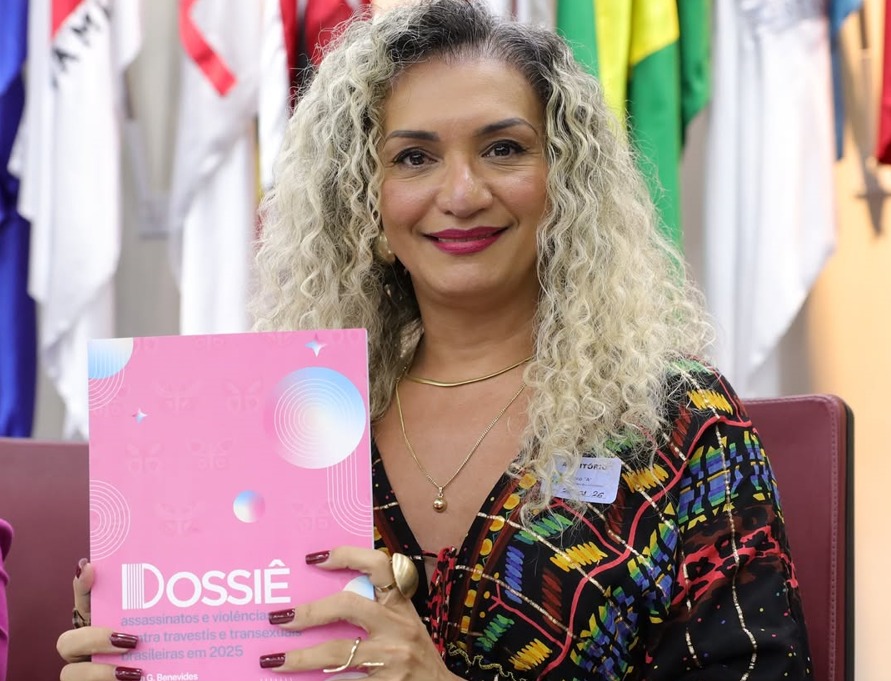

Researcher Nina Santos. Photo: Personal archive

As Brazil prepares for the 2024 municipal elections, disinformation and fake news are once again emerging as major threats to the integrity of the electoral process and to the safety of the population. Amplified by social media platforms, these phenomena not only distort the truth but also undermine trust in institutions, create social divisions and put constitutional rights at risk.

The consequences of disinformation are especially severe for historically marginalized groups, such as women, Black people, and LGBTI+ individuals, who frequently become targets of coordinated disinformation campaigns, political violence and digital hate speech.

In an interview with Conectas, Nina Santos, a director at Aláfia Lab, analyzes the main challenges Brazil will face in the upcoming elections, particularly regarding disinformation, and explores possible paths to safeguard democracy amid digital adversities. Santos holds a PhD in Communication and is a researcher at the National Institute of Science and Technology in Digital Democracy and at Panthéon-Assas University.


In her view, “disinformation affects the very possibility or ability of people, especially women and Black people, to be in social spaces, whether in positions of power, political spaces or running for elections.”

Watch the interview with Nina Santos:

Conectas: What is the impact of disinformation on democracy and the expansion of constitutional rights in Brazil? Is it possible to say that historically marginalized groups, such as those based on race and gender, for example, are more affected?

Nina: Disinformation and hate speech are strongly linked to our social reality, and these phenomena have gained momentum with the rise of digital communication. Digital communication does not itself create social phenomena; rather, it takes up issues that already exist in society and gives them new forms and greater reach. This is the case with misogyny, racism and other issues.

When we talk about disinformation and hate speech in digital environments, we certainly need to consider that these phenomena disproportionately affect more vulnerable groups. Therefore, specific policies need to be in place to ensure that these populations or social segments are protected.

Conectas: Still on that topic, how can disinformation be used as a tool of political violence based on gender and race?

Nina: Disinformation affects the very possibility or ability of people, especially women and Black people, to be present in social spaces, whether in positions of power, political spaces, running for elections or engaging in activities such as journalism or human rights advocacy.

Disinformation has very concrete effects on people’s lives. In a study carried out in 2021, we found that one of the most frequently reported behavioral effects of online attacks on women journalists is self-censorship: they start to restrict how they carry out their work in order to avoid certain risks. So the effects of these attacks are very real.

Conectas: How do you evaluate the scenario of disinformation in the upcoming municipal elections compared to previous ones? Do you believe that the efforts made by public bodies will have positive effects?

Nina: Regarding the elections, yes. First, there are institutional efforts on several fronts to address disinformation, particularly by the Superior Electoral Court (TSE), the body responsible for electoral matters. However, in terms of legislation, we haven’t made any progress towards regulating digital platforms, and we have even regressed in some areas, such as access to data from these platforms. It seems to me that the scenario we face in 2024 is very challenging. There is also the additional issue of artificial intelligence, which is emerging as a more popular and accessible tool, bringing new challenges, new formats and new creative possibilities, and requiring a different approach.

Conectas: How have digital platforms been behaving in this scenario? Have there been any advances or setbacks?

Nina: To some extent, digital platforms have been developing strategies to combat disinformation and hate speech. In fact, since 2022 the platforms have shown a certain degree of concern about this issue. However, there are two very worrying trends. The first, led by Elon Musk, involves reducing the focus, energy and investment in trust and safety teams, which are responsible for, among other things, minimizing harmful content on these platforms. This shift by Musk is a negative one, and taking Twitter, now X, in this direction could encourage other platforms to follow the same path.

The second is a very reactive stance towards regulatory proposals. In Brazil, we have seen a very aggressive reaction from digital platforms to draft bill 2630, dubbed the Fake News bill. Some platforms reacted more strongly than others, but I am speaking broadly here.

Regarding the regulation of artificial intelligence, there is a bit less resistance, because there seems to be a consensus, even among the companies that create and develop AI models, that some level of regulation is necessary. Yet despite this general perception, or discourse, that regulation is important and has support, few actual agreements have been reached, and legislation has therefore not advanced.

Conectas: What are the main challenges for the 2024 elections, and which issues might be more susceptible to disinformation? What do you consider essential for the 2024 elections, and especially for preparing for 2026? What still needs improvement?

Nina: Regarding the 2024 elections, I believe a key challenge is the emergence of artificial intelligence, as we don’t yet fully understand what might happen. That said, the TSE has already issued resolutions, and cases [related to AI] are already being judged.

One big challenge of the municipal elections is that more than five thousand elections take place simultaneously, each in its own local context. It’s very difficult to keep track of all these processes. Attention tends to be heavily concentrated on large cities, especially in the Southeast, so cases of disinformation elsewhere often go unnoticed.

As for progress towards 2026, having legislation is certainly key. In my opinion, until there is a broader, democratically constructed general regulation for these platforms, it will be very difficult to have a framework that goes beyond exceptional cases, the very serious incidents in which action is taken. Such measures are always designed for exceptional situations, not for ordinary ones.

But using the internet and social media is an everyday activity. We use them daily for many different purposes. Disinformation is part of this landscape, but there are many other uses: most of the time, social media is not used to spread disinformation, but perhaps to share cat pictures or other casual content. Therefore, I believe we need to make progress in regulating this everyday use.

Conectas: The Global Coalition for Tech Justice has called on advertisers to join the fight to protect elections and human rights, in response to the negligence of Big Tech companies, especially following numerous failures to protect electoral processes in 2024. How important are these efforts in the Global South, and how does disinformation affect electoral processes differently in these countries? How do companies’ actions around elections in Europe and the United States differ from those in other parts of the world? And how have platforms handled the elections that have already taken place in the Global South?

Nina: There is a significant disparity in how companies treat countries in the Global South compared to the Global North. Access to data is a clear example: today, to access data from some platforms, you have to go through universities in the Global North, requesting authorization and obtaining their approval to gain access. This seems absurd to me.

It is important to understand that digital platforms are also instruments of geopolitics. Global inequalities exist, and digital platforms are primarily developed in the United States: not even in the Global North broadly, but specifically in the United States.

They are also explicitly used to defend certain interests, as exemplified by what Elon Musk has done and continues to do with X. Furthermore, the very structure of these platforms embeds a particular worldview. So I believe that any discussion of social media and the digital environment raises an issue of sovereignty, and that seems to me an important aspect to explore.

More concretely, there are very striking differences in the actions taken by the platforms. For example, in 2022, when the United States was holding midterm elections, Meta prohibited online ads in the period leading up to election day. In Brazil, the Electoral Justice also prohibited such ads, but the platforms did not comply. Access to data is another example, along with the availability of fact-checking tools and even the number of people working on content moderation in local languages.

So there are a number of differences in how platforms treat different countries, not just in the back end, the infrastructure behind the platforms, but also in how they make decisions and handle their relationships with institutions in other countries. I really consider this to be a central issue.